Mar 1, 2024

Paper Details

Author(s):

Muyang Li, Tianle Cai, Jiaxin Cao, Qinsheng Zhang, Han Cai, Junjie Bai, Yangqing Jia, Ming-Yu Liu, Kai Li, Song Han

Publishing Date:

1. What is it?

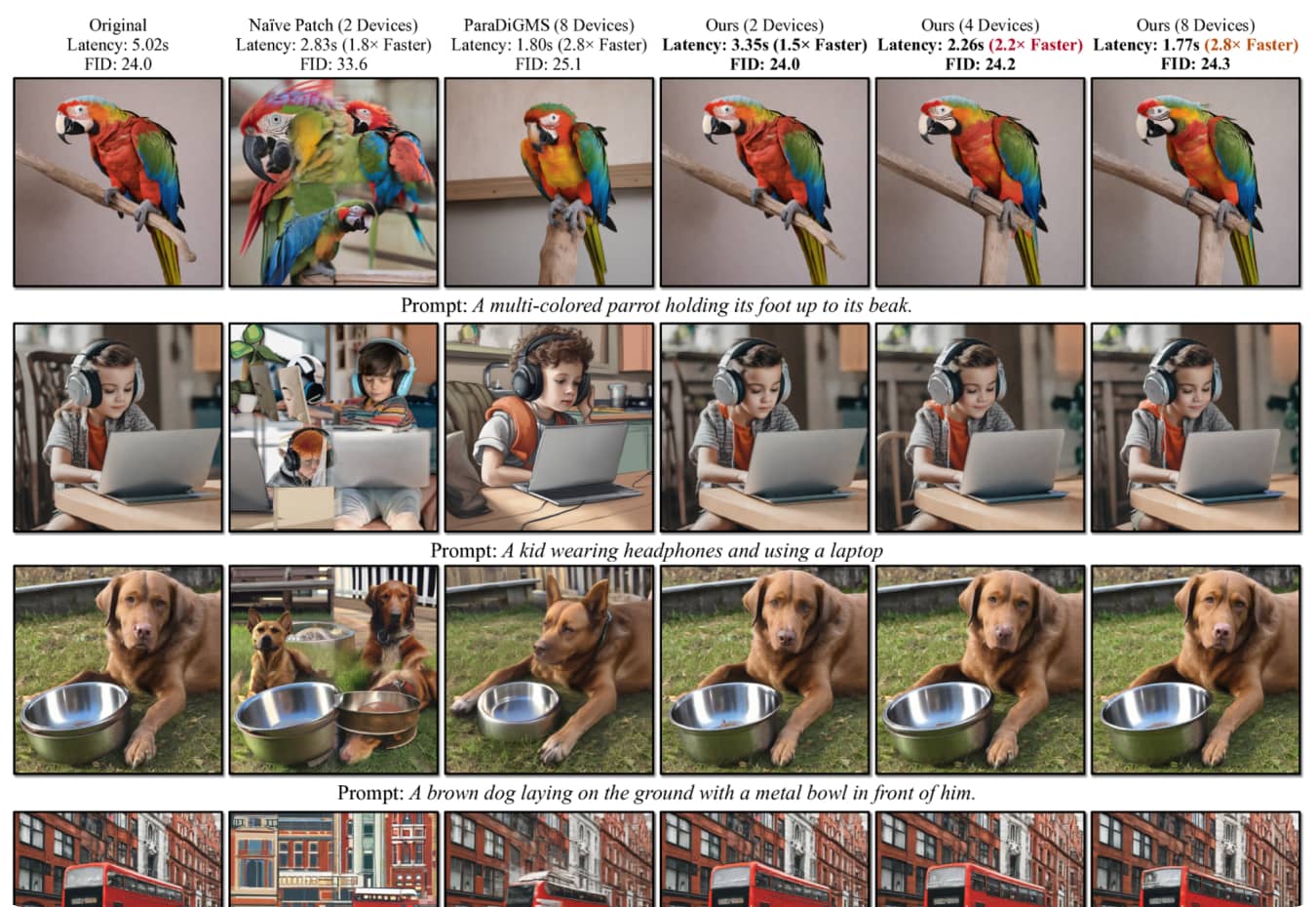

DistriFusion is a method that accelerates high-resolution image generation with diffusion models by running a single image across multiple GPUs in parallel. It splits the input into patches, assigns each patch to a different GPU, and reuses pre-computed features from the previous step as context for the current step. Because that context is already one step old, devices can exchange it asynchronously, which hides the communication overhead behind computation.

2. How does this technology work?

DistriFusion is built on patch parallelism: the input image is divided into multiple patches, and each patch is assigned to a different GPU. Processing patches in complete isolation would break the interaction between them and degrade image quality, so the algorithm reuses the pre-computed feature maps from the previous step as context for the current step. This works because the inputs to adjacent diffusion steps are highly similar, so slightly stale features are a close approximation of fresh ones. Since each device only needs features that were already computed, communication can run asynchronously and its overhead is hidden within the computation pipeline.
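The idea can be sketched on a toy problem. The snippet below is a minimal illustration, not the authors' implementation: it uses a 1-D signal instead of an image, a hypothetical three-tap averaging function (`smooth`) in place of a real network layer, and a plain loop in place of real GPUs. All function names are assumptions made for this example. Each simulated device sees fresh values for its own patch and stale values (from the previous step) everywhere else.

```python
def smooth(x, i):
    """Toy stand-in for a layer: average position i with its neighbours (edges clamped)."""
    left = x[max(i - 1, 0)]
    right = x[min(i + 1, len(x) - 1)]
    return (left + x[i] + right) / 3.0


def full_layer(x):
    """Reference result: one device processes the whole input."""
    return [smooth(x, i) for i in range(len(x))]


def split_ranges(length, num_devices):
    """Contiguous (start, stop) index ranges, one per simulated device."""
    base, extra = divmod(length, num_devices)
    ranges, start = [], 0
    for d in range(num_devices):
        stop = start + base + (1 if d < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def patch_parallel_layer(fresh, stale, num_devices):
    """Each device computes only its own patch, using stale values as context."""
    out = [0.0] * len(fresh)
    for start, stop in split_ranges(len(fresh), num_devices):
        view = list(stale)                     # context from the previous step
        view[start:stop] = fresh[start:stop]   # this device's own patch is fresh
        for i in range(start, stop):
            out[i] = smooth(view, i)
    return out


x = [float(i) for i in range(8)]
stale = [v + 0.5 for v in x]                   # pretend the previous step differed slightly
exact = full_layer(x)
approx = patch_parallel_layer(x, stale, num_devices=2)
changed = [i for i in range(len(x)) if approx[i] != exact[i]]
print(changed)  # only positions next to the patch boundary are affected
```

In this toy, stale context only influences outputs adjacent to the patch boundary, and when the stale values equal the fresh ones the result matches the single-device computation exactly.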

3. How can it be used?

DistriFusion can accelerate high-resolution image generation using diffusion models in applications such as image editing, video generation, and AI-generated content creation. By reducing latency, it can enable more responsive interactions for users, leading to a smoother creative process. The technology is particularly useful in fields such as photography, illustration, animation, design, fashion, 3D art, and filmmaking, as well as for social media influencers, small business owners, startups, and people learning AI image, video, and audio generation.

4. Key Takeaways

DistriFusion leverages multiple GPUs for parallel processing to accelerate high-resolution image generation using diffusion models without sacrificing image quality.

The method is based on patch parallelism, which divides the input into patches and assigns each patch to a different GPU.

Pre-computed feature maps from previous steps are reused as context for the current step, allowing for asynchronous communication between devices.

DistriFusion achieves up to a 6.1× speedup on eight NVIDIA A100s compared to one GPU.

The technology can be used in various applications such as image editing, video generation, and AI-generated content creation.
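To put the 6.1× figure in context, one common way to read a speedup is parallel efficiency: the speedup divided by the number of devices. This metric is not reported in the text above; the short calculation below is only an illustrative sketch, and the function name is an assumption made for this example.

```python
def parallel_efficiency(speedup, num_devices):
    """Fraction of ideal linear scaling achieved (1.0 means perfect scaling)."""
    return speedup / num_devices


# Figures taken from the takeaway above: up to 6.1x on eight GPUs.
efficiency = parallel_efficiency(6.1, 8)
print(f"Parallel efficiency: {efficiency:.2f}")
```

An efficiency below 1.0 reflects whatever work cannot be perfectly divided or hidden, such as residual communication and per-device overhead.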

5. Glossary

Diffusion Models: A family of generative models that learn a data distribution by gradually adding noise to the data and training a neural network to reverse this process.

Patch Parallelism: An approach to parallel processing in which the input is divided into patches, each assigned to a different GPU.

Asynchronous Communication: A communication method that allows devices to communicate with one another without waiting for a response, effectively hiding communication overhead within the computation pipeline.
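The asynchronous communication entry can be illustrated with a small simulation. This is a sketch under stated assumptions, not DistriFusion's code: a background thread stands in for inter-GPU transfer, `slow_gather` is a hypothetical placeholder that simulates communication with a short sleep, and each step's "activation" is just the step number. The point is that the transfer is launched without blocking, overlaps with the rest of the step's computation, and is consumed one step later as stale context.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def slow_gather(activations):
    """Hypothetical stand-in for inter-GPU communication (simulated delay)."""
    time.sleep(0.01)
    return list(activations)


def run_steps(num_steps):
    """Return (step, context) pairs showing which stale context each step saw."""
    log = []
    with ThreadPoolExecutor(max_workers=1) as pool:
        pending = None  # transfer launched during the previous step
        for step in range(num_steps):
            # Collect the previous step's transfer; it has had a whole step to finish.
            context = pending.result() if pending is not None else None
            local = [step]                              # toy activation for this step
            pending = pool.submit(slow_gather, local)   # launch transfer, do not wait
            time.sleep(0.01)                            # rest of this step's compute overlaps
            log.append((step, context))
    return log


print(run_steps(3))
```

In this toy, the first step has no earlier context to reuse, and every later step works with the activations sent during the step before it.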

6. FAQs

a. Can DistriFusion be applied to any diffusion model?

In most cases, yes. DistriFusion only requires off-the-shelf pre-trained diffusion models, with no additional training, and is applicable to most few-step samplers.

b. Are there any limitations to using DistriFusion?

DistriFusion has limited speedups for low-resolution images as the devices are underutilized. Additionally, the method may not work for extremely-few-step methods due to rapid changes in denoising states.

c. How can DistriFusion improve the efficiency of AI-generated content creation?

By reducing latency through efficient parallel processing, DistriFusion enables more responsive user interactions, leading to a smoother creative process. This advancement enhances the efficiency of AI-generated content creation and sets a new benchmark for future research in parallel computing for AI applications.

d. What are some potential societal impacts of DistriFusion?

While DistriFusion can enable more responsive interactions for users, generative models can be misused. To mitigate potential harms, it's essential to specify authorized uses in the license and promote thoughtful governance as this technology evolves.

Disclaimer:

This text has been generated by an AI model, but originally researched, organized, and structured by a human author. The grammar and writing is enhanced by the use of AI.